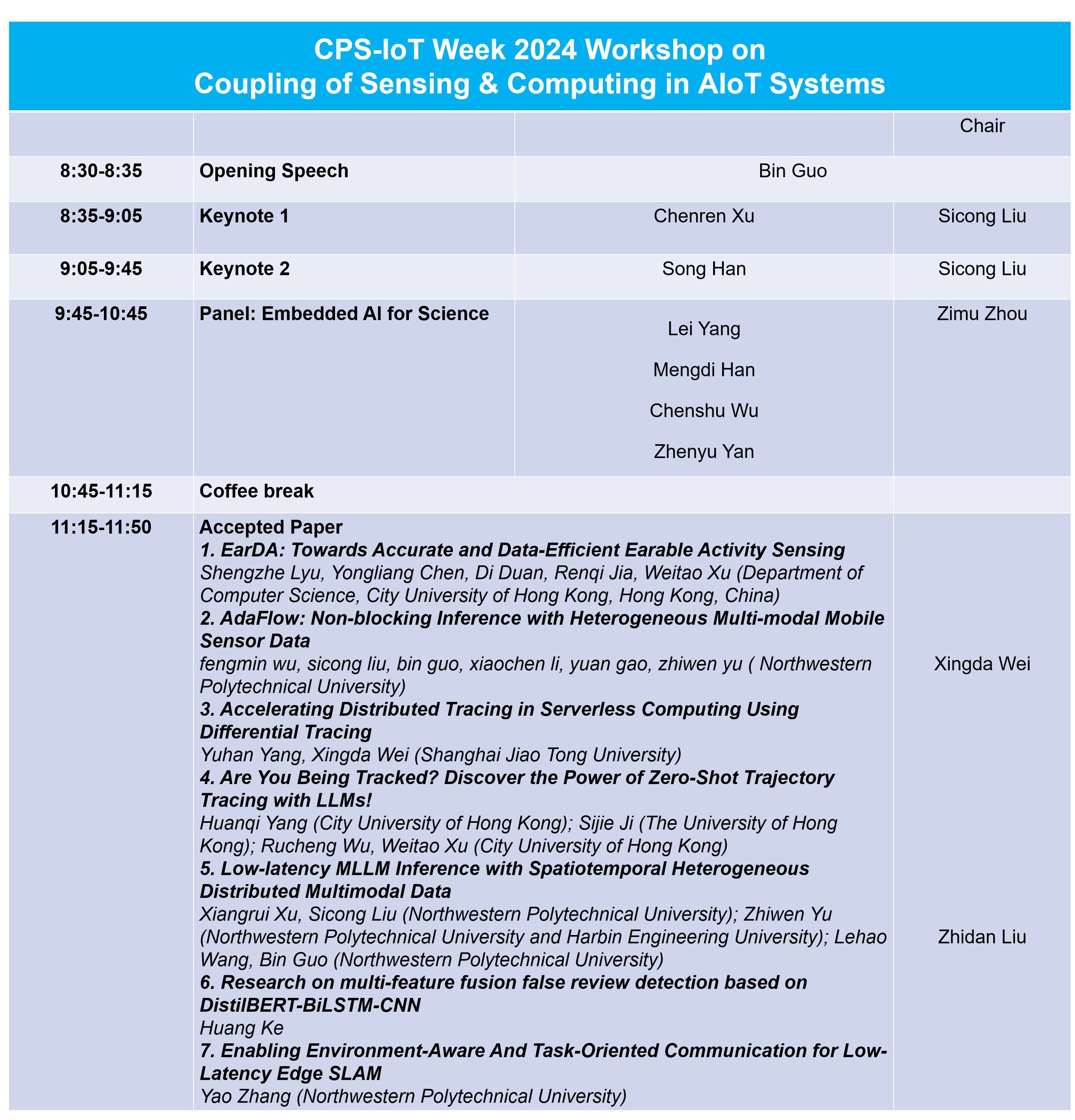

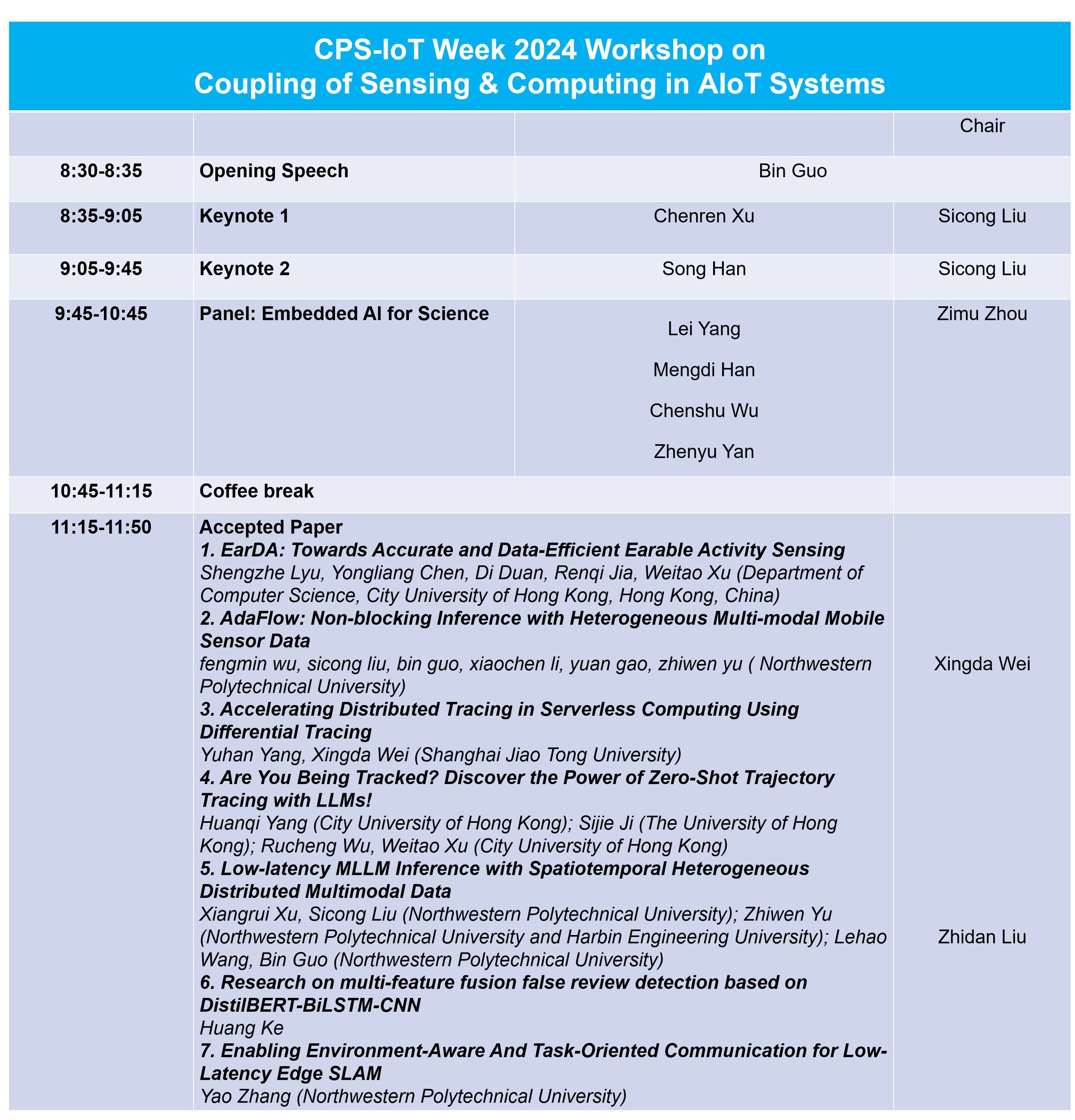

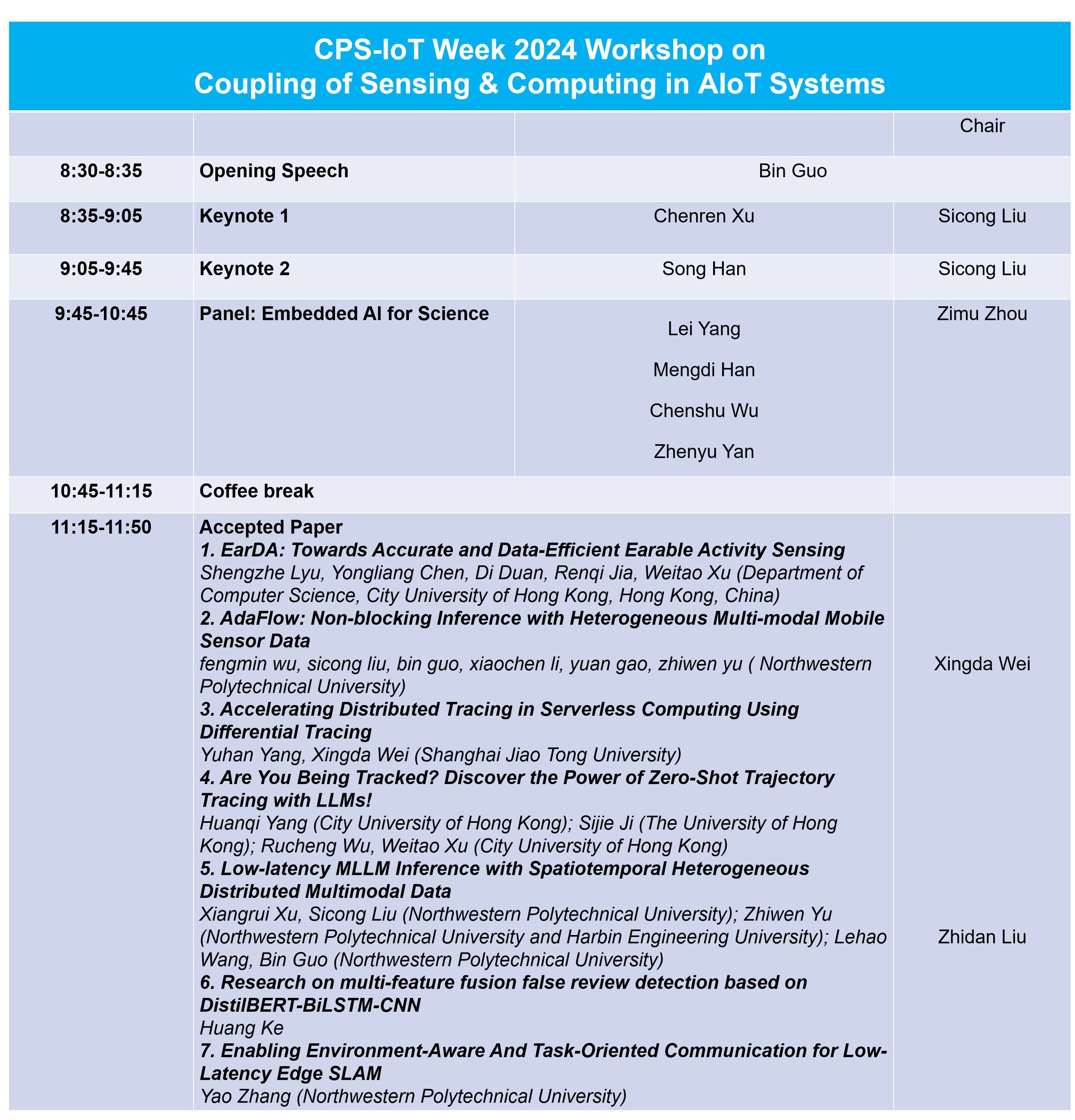

ABSTRACT: The Physical Internet envisions renovating the digital transportation network so that physical goods are delivered with efficiency, resilience and sustainability in modern logistics. One key factor on the path to the Physical Internet is traceability: the accurate, timely, and reliable acquisition of goods status across the full supply chain and its operational cycle, which in essence relies on ubiquitous mobile connectivity in 1) high-density package location tracking in warehouse inventory, 2) highly available V2X cooperation in (last-mile) autonomous urban delivery, and 3) high-mobility freight status streaming in networked transportation. This talk will introduce the design, implementation and deployment experience of our mobile RFID, VILD and multipath networking systems for improving scalability, availability and reliability in logistics, vehicular and high-speed railway networks, towards the vision of (ubiquitous mobile connectivity in) the Physical Internet.

Bio: Prof. Chenren Xu is a Boya Young Fellow Associate Professor (with early tenure) and Deputy Director of the Institute of Networking and Energy-efficient Computing in the School of Computer Science at Peking University (PKU), where he directs the Wireless AI for Science (WAIS) Lab. His research interests span wireless, networking and systems, with a current focus on backscatter communication for low-power IoT connectivity, the future mobile Internet for high-mobility data networking, and collaborative edge intelligence systems for mobile and IoT computing. He earned his Ph.D. from WINLAB, Rutgers University, and worked as a postdoctoral fellow at Carnegie Mellon University and as a visiting scholar at AT&T Shannon Labs and Microsoft Research. He has been active as a TPC and OC member in top networking research venues including SIGCOMM, NSDI, MobiCom, MobiSys, SenSys and INFOCOM. He is an Editor of ACM IMWUT and serves on the Steering Committees of UbiComp and HotMobile. He is a recipient of the NSFC Excellent Young Scientists Fund (2020), ACM SIGCOMM China Rising Star (2020), Alibaba DAMO Academy Young Fellow (2018) and CCF-Intel Young Faculty (2017) awards. His work has been featured in MIT Technology Review.

ABSTRACT: This talk presents efficient multi-modal LLM innovations across the full stack. I’ll first present VILA, a visual language model pre-training recipe that goes beyond visual instruction tuning and enables multi-image reasoning and in-context learning capabilities, followed by SmoothQuant and AWQ for LLM quantization and the TinyChat inference library. AWQ and TinyChat make VILA 3B deployable on Jetson Orin Nano, bringing new capabilities to mobile vision applications. Second, I’ll present efficient representation learning, including EfficientViT for high-resolution vision, which accelerates SAM by 48x without performance loss, and condition-aware neural networks for adding control to diffusion models. Third, I’ll present StreamingLLM, a KV cache optimization technique for long conversations, and LongLoRA, which uses sparse, shifted attention for long-context LLMs.

Bio: Song Han is an associate professor at MIT EECS. He received his PhD degree from Stanford University. He proposed the “Deep Compression” technique, including pruning and quantization, that is widely used for efficient AI computing, and the “Efficient Inference Engine” that first brought weight sparsity to modern AI chips, which is a top-5 cited paper in 50 years of ISCA. He pioneered the TinyML research that brings deep learning to IoT devices. His team’s recent work on large language model quantization and acceleration (SmoothQuant, AWQ, StreamingLLM) improved the efficiency of LLM inference and was adopted by NVIDIA TensorRT-LLM. Song received best paper awards at ICLR and FPGA, and faculty awards from Amazon, Facebook, NVIDIA, Samsung and SONY. Song was named to the “35 Innovators Under 35” list by MIT Technology Review and received the NSF CAREER Award and the Sloan Research Fellowship. Song was the cofounder of DeePhi (now part of AMD) and cofounder of OmniML (now part of NVIDIA). Song developed the EfficientML.ai course to disseminate efficient ML research.

The workshop addresses challenges in traditional IoT systems where the interaction between sensing and computing resources follows predefined patterns, leading to fixed collaboration modes and limited adaptability. This impedes effective coordination between perception and computation in highly dynamic scenarios. With the deep integration of artificial intelligence, edge computing, and intelligent hardware into the Internet of Things (IoT), Artificial Intelligence of Things (AIoT) emerges as a crucial development trend. Key to AIoT is the continuous enhancement of intelligent sensing and computing resources at the edge. On one side, the "edge" possesses rich modalities for intelligent perception, and on the other, the "end" features dynamically heterogeneous intelligent computing capabilities. The synergy between sensing and computing becomes pivotal in maximizing hardware capabilities, improving real-time system responsiveness, and empowering adaptive and self-evolving AIoT systems.

With the continuous development and miniaturization of smart chips, processors, and sensor devices, mobile devices are increasingly endowed with intelligent data processing and analysis capabilities. These devices can perform partial data processing and intelligent inference tasks under resource constraints, supporting real-time computing in AIoT (Artificial Intelligence of Things) and the protection of data privacy. The integration of IoT (Internet of Things) and AI (Artificial Intelligence) can better utilize local sensory data for computational optimization. Using local sensory data, AI models can be personalized, thereby improving the collection and analysis of information and enhancing the quality of human life. Ideally, computation and perception should be mutually complementary and efficiently collaborative. In reality, however, achieving an adaptive and reliable AIoT system that integrates sensing and computing remains challenging. Maintaining computational robustness in constantly changing environments, adapting to the resource and energy constraints of IoT devices, and updating AI models through local data are significant challenges. This workshop provides an opportunity for researchers and system developers to discuss the design, development, deployment, and use of algorithms and models, distributed systems and algorithms, task scheduling, and other aspects of intelligent IoT systems. Papers on the theory, practice, and methodological issues related to intelligent IoT are welcome.

| General Chair |
|---|
| Sicong Liu, Northwestern Polytechnical University |
| Zimu Zhou, City University of Hong Kong |
| Bin Guo, Northwestern Polytechnical University |

| TPC Members |
|---|
| Chenren Xu, Peking University |
| Chenshu Wu, The University of Hong Kong |
| Chris Xiaoxuan Lu, University of Edinburgh |
| Longfei Shangguan, University of Pittsburgh |
| Dong Ma, Delft University of Technology |
| Olga Saukh, TU Graz |
| Shijia Pan, UC Merced |
| Wan Du, UC Merced |
| Edith Ngai, The University of Hong Kong |
| Guohao Lan, Delft University of Technology |
| Xiuzhen Guo, Zhejiang University |
| Meng Jin, Shanghai Jiao Tong University |
| Xingda Wei, Shanghai Jiao Tong University |
| Pengfei Wang, Dalian University of Technology |
| Huihui Chen, Fuzhou University |
| Zhidan Liu, Shenzhen University |