Abstract

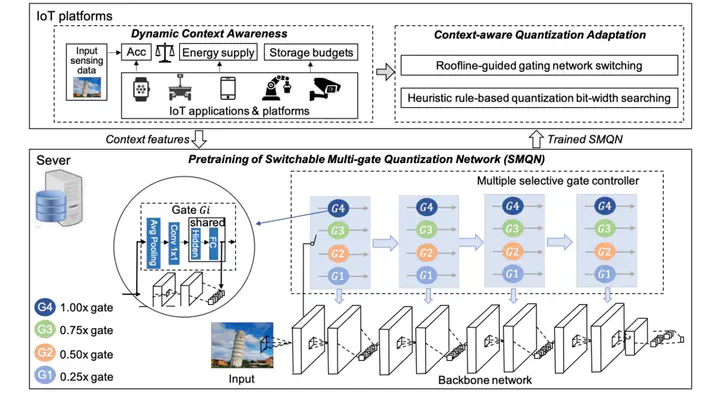

Artificial Intelligence of Things (AIoT) has recently attracted significant interest. Notably, on-device embedded artificial intelligence (e.g., deep learning) transforms IoT devices into intelligent systems that process data robustly and privately. Quantization is widely used to compress deep models, narrowing the gap between a model's computation demands and the resources a platform can supply. However, existing quantization schemes are unsatisfactory for IoT scenarios because they are oblivious to dynamic changes in application context (e.g., battery level and hierarchical memory availability) during long-term operation. Consequently, they fail to match the user-desired resource efficiency and application lifetime. Moreover, neither existing approach adapts well to a dynamic context: hand-crafted quantization incurs unacceptable latency for model retraining, and prior on-demand quantization incurs unacceptable overhead for re-searching quantization bit-widths. This article presents a context-aware and self-adaptive deep model quantization (CAQ) system for IoT application scenarios. CAQ integrates a novel switchable multigate quantization framework that optimizes quantized-model accuracy and energy efficiency across diverse contexts. Based on the learned model, CAQ switches among different gating networks in a context-aware manner and uses the selected network to automatically capture the representation importance of each layer for optimal quantization bit-width selection. Experimental results show that CAQ achieves up to 50% storage savings while even improving accuracy by 2.61% over state-of-the-art baselines.
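To make the idea of context-aware, per-layer bit-width selection concrete, the following is a minimal sketch and not the CAQ implementation. It assumes a single context signal (a battery level in [0, 1]), replaces the learned gating networks with fixed threshold pairs, and uses mean absolute weight as a stand-in for layer importance. The names `GATES`, `select_gate`, `choose_bits`, `layer_importance`, and `quantize_model`, the candidate bit-widths (2, 4, 8), and all threshold values are hypothetical and chosen only for illustration.

```python
from typing import Dict, List, Tuple

# Stand-in "gates": one (low, high) threshold pair per context.
# ASSUMPTION: in CAQ the gates are learned networks; fixed thresholds
# are used here purely to illustrate the switching behaviour.
GATES: Dict[str, Tuple[float, float]] = {
    "low_battery": (0.5, 0.9),   # stricter: fewer layers get many bits
    "high_battery": (0.2, 0.6),  # looser: more layers get many bits
}


def select_gate(battery_level: float) -> Tuple[float, float]:
    """Switch gates based on the current context (hypothetical 0.3 cutoff)."""
    key = "low_battery" if battery_level < 0.3 else "high_battery"
    return GATES[key]


def layer_importance(weights: List[float]) -> float:
    """Hypothetical importance proxy: mean absolute weight, capped at 1."""
    return min(1.0, sum(abs(w) for w in weights) / len(weights))


def choose_bits(importance: float, gate: Tuple[float, float]) -> int:
    """Map an importance score to one of the assumed bit-widths 2, 4, 8."""
    low, high = gate
    if importance < low:
        return 2
    if importance < high:
        return 4
    return 8


def quantize_uniform(weights: List[float], bits: int) -> List[float]:
    """Symmetric uniform quantization of a weight list to `bits` bits."""
    levels = 2 ** (bits - 1) - 1                   # e.g. 127 for 8 bits
    max_abs = max(abs(w) for w in weights) or 1.0  # avoid a zero scale
    scale = max_abs / levels
    return [round(w / scale) * scale for w in weights]


def quantize_model(layers: Dict[str, List[float]], battery_level: float):
    """Pick a gate for the context, then quantize each layer accordingly."""
    gate = select_gate(battery_level)
    plan: Dict[str, int] = {}
    quantized: Dict[str, List[float]] = {}
    for name, weights in layers.items():
        bits = choose_bits(layer_importance(weights), gate)
        plan[name] = bits
        quantized[name] = quantize_uniform(weights, bits)
    return plan, quantized


if __name__ == "__main__":
    toy_layers = {"conv1": [0.8, -0.7, 0.9], "fc": [0.1, -0.05, 0.2]}
    for battery in (0.1, 0.9):
        plan, _ = quantize_model(toy_layers, battery)
        print(f"battery={battery}: bit-width plan = {plan}")
```

In this toy setup the same model receives a different bit-width plan when the battery level changes, without any retraining or bit-width search at switch time, which is the behaviour the abstract attributes to switching among gating networks.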